TAMU Datathon

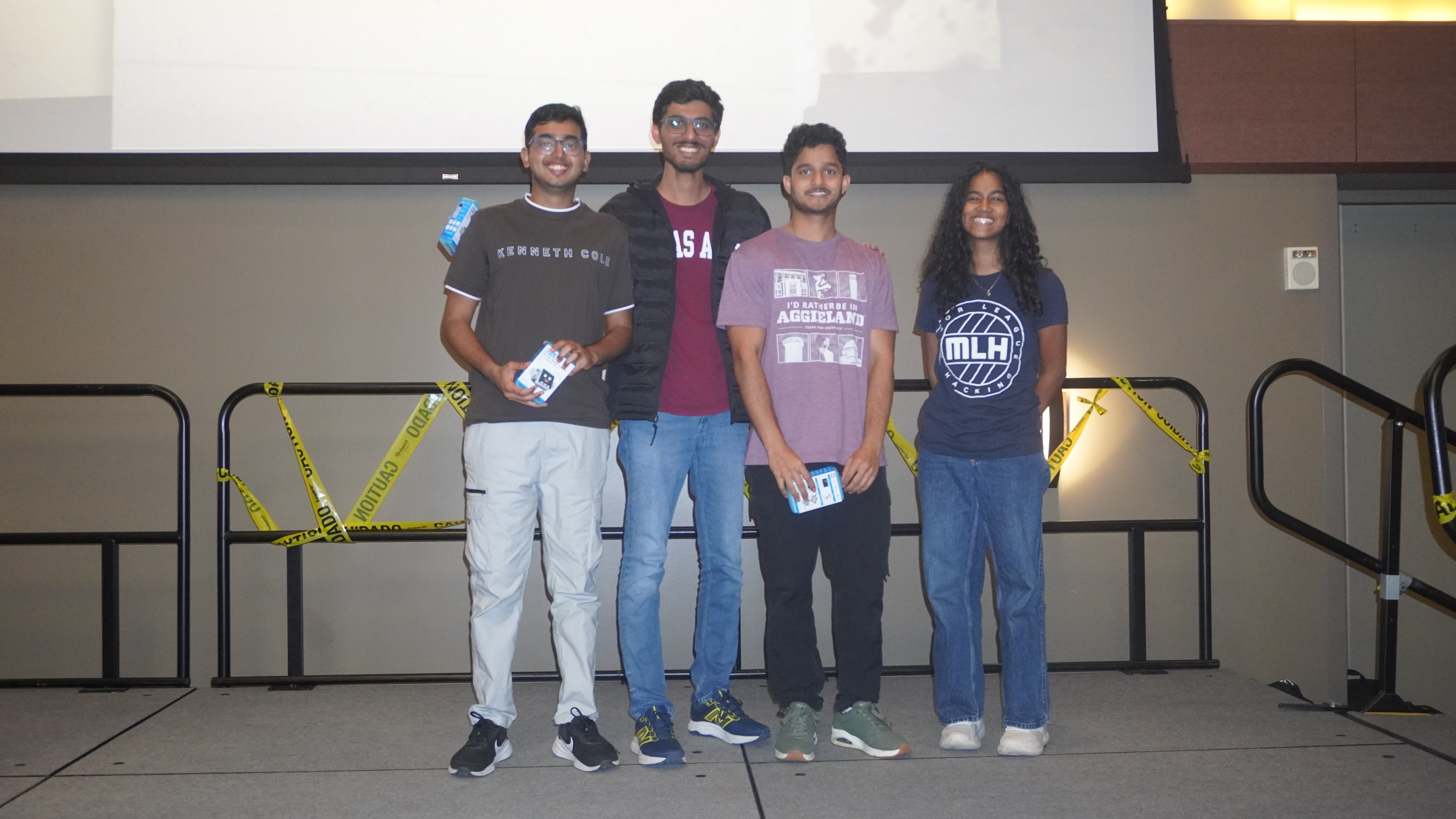

🏆 Achievement: Best Use of MongoDB

We won the Best Use of MongoDB award at TAMU Datathon for our project G-3, an intelligent Google Workspace assistant that unifies 20+ Google services into one natural-language interface.

Our innovative use of MongoDB for context management and conversation history enabled the assistant to maintain continuity across sessions, making follow-up questions like "that meeting" or "the document I created" work naturally.

Project: G-3 - Intelligent Google Workspace Assistant

💡 Inspiration

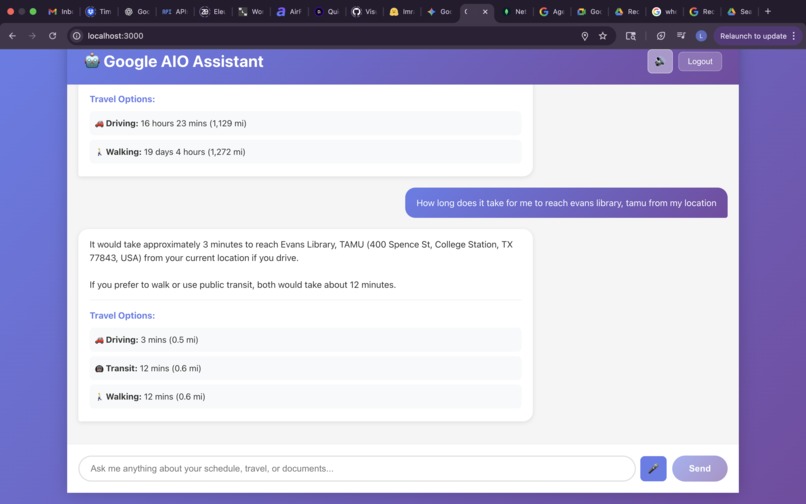

We were frustrated by having to switch between multiple Google apps (Calendar, Gmail, Drive, Maps, etc.) to complete simple tasks. We wanted a single conversational interface that could access all Google services and understand context across them. For example, asking "How long to my next meeting?" should return an answer that checks your calendar, calculates travel time, and suggests when to leave, all in one conversation.

⚡ What it does

G-3 is an intelligent Google Workspace assistant that unifies 20+ Google services into one natural language interface. Users can ask questions or give commands in plain English, and the assistant automatically:

- Manages your schedule: View, create, edit, and delete calendar events; calculate travel times to meetings

- Handles communications: Search and read Gmail, find contacts, create Google Meet links

- Creates content: Generate Google Docs, Slides, and Sheets on the fly

- Organizes work: Manage Google Tasks, create and search Google Keep notes, find files in Drive

- Provides information: Search the web, get weather forecasts, find nearby places, check air quality, get timezone info

- Manages forms: Create Google Forms with questions, edit questions, view responses

- Finds entertainment: Search YouTube videos, get video details, access playlists

The assistant uses MongoDB to remember conversation context, so follow-up questions like "that meeting" or "the document I created" work naturally. It also features voice input (Web Speech API) and text-to-speech output (ElevenLabs) for hands-free interaction.
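To illustrate how a follow-up such as "that meeting" could be resolved from stored context, here is a minimal sketch. It is not the project's actual code: the `ContextStore` class, the `RecentEntity` shape, and the phrase matching in `resolveReference` are all hypothetical, and an in-memory `Map` stands in for the MongoDB collection so the example runs on its own.

```typescript
// Hypothetical kinds of things the assistant may have touched in a conversation.
type EntityKind = "meeting" | "document";

// Hypothetical record of an item the user recently viewed or created.
interface RecentEntity {
  kind: EntityKind;
  id: string; // e.g. a calendar event ID or a Drive file ID
  title: string;
  touchedAt: number; // epoch milliseconds
}

// In-memory stand-in for a per-user context collection (MongoDB in the project).
class ContextStore {
  private byUser = new Map<string, RecentEntity[]>();

  // Record that the user just viewed or created this entity.
  remember(userId: string, entity: RecentEntity): void {
    const list = this.byUser.get(userId) || [];
    list.push(entity);
    this.byUser.set(userId, list);
  }

  // Return the most recently touched entity of the given kind, if any.
  mostRecent(userId: string, kind: EntityKind): RecentEntity | undefined {
    const list = this.byUser.get(userId) || [];
    let best: RecentEntity | undefined = undefined;
    for (const entity of list) {
      if (entity.kind !== kind) continue;
      if (best === undefined || entity.touchedAt >= best.touchedAt) best = entity;
    }
    return best;
  }
}

// Map a vague phrase to the entity it most plausibly refers to.
// Only the two phrases from the writeup are handled here.
function resolveReference(
  store: ContextStore,
  userId: string,
  phrase: string
): RecentEntity | undefined {
  const text = phrase.toLowerCase();
  if (text.includes("meeting")) return store.mostRecent(userId, "meeting");
  if (text.includes("document")) return store.mostRecent(userId, "document");
  return undefined;
}
```

In a real deployment the lookup would presumably be a query against the stored conversation context rather than a scan of an array, but the idea is the same: the assistant records what it touched and resolves vague references to the most recent match.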

🛠️ How we built it

- Frontend: React with a chat interface that supports voice input and audio playback

- Backend: Express.js server with modular service architecture

- AI Engine: Google Gemini 2.5 Flash with function calling to route queries to the right services

- Context Management: MongoDB stores conversation history, user context, and preferences to maintain continuity across sessions

- Google APIs Integration: 20+ service integrations including Calendar, Gmail, Drive, Docs, Sheets, Slides, Maps, Tasks, Contacts, Meet, Keep, Forms, YouTube, Places, Timezone, Weather, Air Quality, and Google Search

- Speech: ElevenLabs API for natural-sounding text-to-speech responses

- Architecture: Model Context Protocol (MCP) for structured communication between the AI and services

- Authentication: OAuth 2.0 for secure Google account access
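The routing step in the list above can be sketched as a small registry that maps a model-issued function call to a backend service. This is an illustration under assumptions, not the project's implementation: the tool names, the `service` field, and the `routeToolCall` helper are hypothetical, and the call is assumed to arrive as a plain object with a `name` and an `args` map. No Gemini SDK calls are made.

```typescript
// Hypothetical declaration of a tool the model is allowed to call.
interface ToolDeclaration {
  name: string;
  description: string;
  service: string; // backend service module that handles the call
  required: string[]; // argument names that must be present
}

// Assumed shape of a function call produced by the model.
interface ToolCall {
  name: string;
  args: Record<string, unknown>;
}

type RouteResult =
  | { ok: true; service: string; args: Record<string, unknown> }
  | { ok: false; error: string };

// Illustrative tool list; the real project would declare many more.
const tools: ToolDeclaration[] = [
  {
    name: "create_calendar_event",
    description: "Create a Google Calendar event",
    service: "calendar",
    required: ["title", "start"],
  },
  {
    name: "search_gmail",
    description: "Search the user's Gmail messages",
    service: "gmail",
    required: ["query"],
  },
];

// Validate a call against its declaration and pick the service to run it.
function routeToolCall(
  call: ToolCall,
  declared: ToolDeclaration[] = tools
): RouteResult {
  const tool = declared.find((t) => t.name === call.name);
  if (tool === undefined) {
    return { ok: false, error: `Unknown tool: ${call.name}` };
  }
  const missing = tool.required.filter(
    (key) => !Object.prototype.hasOwnProperty.call(call.args, key)
  );
  if (missing.length > 0) {
    return {
      ok: false,
      error: `Missing arguments for ${tool.name}: ${missing.join(", ")}`,
    };
  }
  return { ok: true, service: tool.service, args: call.args };
}
```

Validating arguments before dispatch gives the backend a clear error to hand back to the model when it picks a tool but omits a parameter, instead of failing inside a Google API call.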

🏆 Accomplishments

- Best Use of MongoDB Award - Recognized for innovative context management

- Full CRUD operations across all integrated services

- Context-aware conversations powered by MongoDB

- 20+ Google service integrations successfully implemented

- Intelligent routing with Gemini function calling

- Complete voice-first experience with Web Speech API and ElevenLabs

💡 Key Challenges Overcome

- Managing 20+ Google API integrations with different authentication requirements and rate limits

- Building a robust context management system in MongoDB

- Getting Gemini to reliably choose the right tools for complex queries

- Syncing voice input/output while maintaining conversation flow

- Implementing smart caching and request batching to stay within rate limits
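As an illustration of the caching mentioned in the last challenge, here is a minimal time-to-live cache. It is a sketch under assumptions rather than the project's code: the `TtlCache` class and `cachedLookup` helper are hypothetical names, the fetch function is synchronous for brevity (a real API call would be asynchronous), and the clock is injectable so expiry can be exercised without waiting.

```typescript
// A small time-to-live cache: entries expire ttlMs after they are stored.
class TtlCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private ttlMs: number;
  private now: () => number;

  // `now` is injectable so expiry can be tested without real delays.
  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // drop stale data
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Return the cached value if it is still fresh; otherwise fetch and store it.
function cachedLookup<V>(cache: TtlCache<V>, key: string, fetch: () => V): V {
  const hit = cache.get(key);
  if (hit !== undefined) return hit;
  const value = fetch();
  cache.set(key, value);
  return value;
}
```

Repeated questions within the TTL window (for example, asking about the weather twice in a minute) would then be answered from memory and would not count against the upstream API's quota.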

📚 What we learned

- Function calling with LLMs: Gemini's function calling is powerful but requires careful tool definitions and system instructions

- Context is king: Storing conversation history in MongoDB dramatically improved the assistant's ability to handle follow-up questions

- API integration patterns: Building a consistent abstraction layer across all services taught us about API design

- Error resilience: Building systems that degrade gracefully when services fail is crucial for user experience

- Voice UX challenges: Voice interfaces need different UX patterns than text, including audio feedback, clear error messages, and handling of interruptions

- MCP benefits: Using a structured protocol made it easier to add new services and debug issues

- Rate limit management: Proactive caching and request optimization are essential when working with multiple rate-limited APIs
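The graceful-degradation lesson above can be sketched as a reply builder that still answers when some of the services behind a request fail. This is an assumed design, not the project's code: the `ServiceResult` type, the `composeReply` function, and the wording of the messages are hypothetical.

```typescript
// Hypothetical outcome of one service call made while answering a request.
type ServiceResult =
  | { service: string; ok: true; text: string }
  | { service: string; ok: false; error: string };

// Build a reply from whatever succeeded and name the services that failed.
function composeReply(results: ServiceResult[]): string {
  const parts: string[] = [];
  const failed: string[] = [];
  for (const result of results) {
    if (result.ok) {
      parts.push(result.text);
    } else {
      failed.push(result.service);
    }
  }
  if (parts.length === 0) {
    return "Sorry, I could not reach any of the services needed for that request.";
  }
  if (failed.length === 0) {
    return parts.join(" ");
  }
  return `${parts.join(" ")} (I could not reach: ${failed.join(", ")}.)`;
}
```

For a question like "How long to my next meeting?", this would let the assistant still report the meeting time from Calendar when the travel-time lookup fails, rather than returning an error for the whole request.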

🚀 What's next for G-3

- Enhanced NLP for more natural, conversational interactions

- Multi-user support with team workspaces for collaboration

- Automation workflows for custom tasks like weekly reports

- Advanced calendar features with smart meeting scheduling

- Full email composition capabilities with smart drafting

- Direct document editing through natural language commands

- Integration expansion to more Google services and third-party tools

- Native mobile apps for iOS and Android

- Better voice recognition with multi-language support

- Analytics dashboard for productivity insights