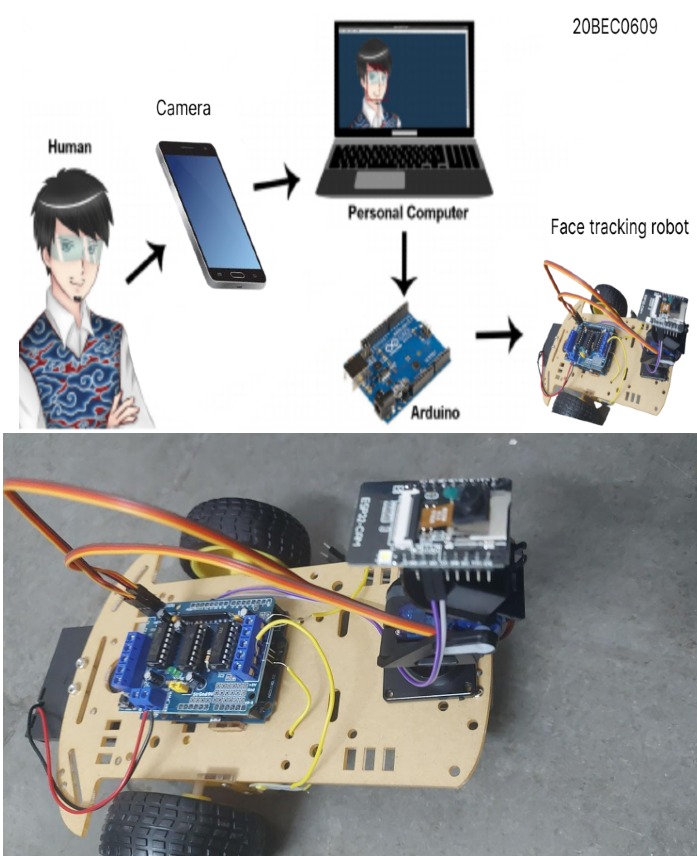

Face Tracking Robot

3-Wheeled Robot with Pan-Tilt Mechanism and Vision-Based Tracking

📋 Project Overview

The Face Tracking Robot is an autonomous robotic system that uses computer vision to detect and track human faces in real time. The robot features a 3-wheeled omnidirectional base for smooth movement and a pan-tilt camera mechanism for precise face tracking. Built using ROS2, the system demonstrates the integration of perception, control, and actuation in a complete robotic application.

This project showcases practical robotics skills including sensor integration, control systems, path planning, and real-time computer vision processing, making it an excellent example of end-to-end robotic system development.

💡 Problem Statement

Building an autonomous face tracking system involves several challenges:

- Real-time Processing: Face detection and tracking must operate at video frame rates

- Robust Detection: Handling varying lighting conditions, angles, and occlusions

- Precise Control: Smooth and accurate pan-tilt movements to keep the face centered in the frame

- Robot Navigation: Coordinating base movement with camera orientation

- System Integration: Seamless communication between vision, control, and actuation modules

- Latency Management: Minimizing delay between detection and robot response

⚡ Solution Approach

The system integrates multiple components:

- Face Detection: Haar cascades or deep learning-based face detection (MTCNN/YOLO)

- Face Tracking: Kalman filter for predictive tracking and smooth following

- Pan-Tilt Control: PID controllers for precise camera positioning

- Robot Base Control: Differential drive or omnidirectional control for base movement

- ROS2 Architecture: Modular nodes for vision, control, and actuation

- Coordinate Transformation: Converting image coordinates to the robot frame
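A minimal sketch of the pan-tilt PID loop, written in Python (the language of ROS2's rclpy, although nothing below depends on ROS2). It assumes the error is the horizontal pixel offset between the detected face center and the image center of a 640-pixel-wide frame, and that the output is a pan-angle step in degrees. The `PID` class, the gains, and the 5° output limit are illustrative placeholders, not the project's tuned values.

```python
from typing import Optional


class PID:
    """Discrete PID controller with output clamping.

    Uses conditional integration as a simple anti-windup scheme: the
    integral only accumulates while the output is not saturated.
    """

    def __init__(self, kp: float, ki: float, kd: float, out_limit: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def reset(self) -> None:
        """Clear controller state, e.g. after the face is lost."""
        self.integral = 0.0
        self.prev_error = None

    def update(self, error: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        # No derivative term on the first sample, to avoid a spurious kick.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        tentative_integral = self.integral + error * dt
        output = self.kp * error + self.ki * tentative_integral + self.kd * derivative
        if abs(output) <= self.out_limit:
            self.integral = tentative_integral  # integrate only while unsaturated
        return max(-self.out_limit, min(self.out_limit, output))


# Hypothetical usage: 640-px-wide frame, face center detected at x = 400 px, 30 FPS.
pan_pid = PID(kp=0.05, ki=0.01, kd=0.002, out_limit=5.0)
error_px = 400 - 320  # face x minus image-center x
pan_step_deg = pan_pid.update(error_px, dt=1 / 30)
```

A second instance driven by the vertical pixel error would serve the tilt axis. Whether a positive error should turn the servo left or right depends on how the servo is mounted, so the sign may need flipping.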

🛠️ Technical Implementation

Hardware Components

- Robot Base: 3-wheeled omnidirectional platform with encoders

- Camera System: USB camera or Raspberry Pi camera module

- Pan-Tilt Mechanism: Two servo motors for camera orientation

- Computing Unit: Raspberry Pi or onboard computer for processing

- Motor Controllers: PWM-based control for smooth movement
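The servo side of the pan-tilt mechanism can be sketched as a mapping from a commanded angle to a PWM pulse. This assumes a typical hobby servo refreshed at 50 Hz with pulses of roughly 1000 to 2000 µs across 0 to 180°; real pulse limits vary by servo and should come from its datasheet. The function names and the 12-bit counter resolution are hypothetical.

```python
def angle_to_pulse_us(angle_deg: float,
                      min_angle: float = 0.0, max_angle: float = 180.0,
                      min_us: float = 1000.0, max_us: float = 2000.0) -> float:
    """Linearly map a servo angle to a pulse width in microseconds, clamping out-of-range angles."""
    angle = max(min_angle, min(max_angle, angle_deg))
    fraction = (angle - min_angle) / (max_angle - min_angle)
    return min_us + fraction * (max_us - min_us)


def pulse_to_duty(pulse_us: float, freq_hz: float = 50.0) -> float:
    """Convert a pulse width to a duty-cycle fraction of the PWM period."""
    period_us = 1_000_000.0 / freq_hz
    return pulse_us / period_us


def duty_to_ticks(duty: float, resolution_bits: int = 12) -> int:
    """Quantize a duty cycle for a PWM peripheral with the given counter resolution."""
    return round(duty * (1 << resolution_bits))


pulse = angle_to_pulse_us(90.0)  # 1500.0 µs (mid-travel)
duty = pulse_to_duty(pulse)      # 0.075 at 50 Hz
ticks = duty_to_ticks(duty)      # 307 on a 12-bit counter
```

Clamping in `angle_to_pulse_us` protects the mechanism from commands past its end stops; a tilt servo with a narrower safe range would simply be called with tighter `min_angle`/`max_angle` values.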

Software Architecture

- ROS2 Nodes: Modular architecture with separate nodes for each component

- Vision Node: OpenCV-based face detection and tracking

- Control Node: PID controllers for pan-tilt and base movement

- Actuation Node: Motor control and servo positioning

- Topic Communication: Camera images, face coordinates, and control commands

- TF2: Coordinate frame transformations between camera and robot base
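The camera-to-base transformation that TF2 manages can be illustrated for the direction-only case. This sketch assumes an undistorted pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, the ROS optical-frame convention (x right, y down, z forward), a base frame with x forward, y left, z up, and a camera sitting exactly on the pan-tilt pivot. The function name and these conventions are assumptions for illustration; a real TF2 tree would also carry the translation offsets between frames.

```python
import math
from typing import Tuple


def pixel_to_base_angles(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                         pan: float, tilt: float) -> Tuple[float, float]:
    """Return (azimuth, elevation) in radians of the ray through pixel (u, v), in the base frame.

    pan: rotation about the base z-axis (positive = left).
    tilt: elevation of the optical axis (positive = up).
    """
    # Ray through the pixel in the optical frame (x right, y down, z forward).
    xo, yo, zo = (u - cx) / fx, (v - cy) / fy, 1.0
    # Re-express it in a camera frame with x forward, y left, z up.
    x, y, z = zo, -xo, -yo
    # Tilt: rotate about y by -tilt so that positive tilt raises the optical axis.
    ct, st = math.cos(tilt), math.sin(tilt)
    x, z = ct * x - st * z, st * x + ct * z
    # Pan: rotate about the base z-axis.
    cp, sp = math.cos(pan), math.sin(pan)
    x, y = cp * x - sp * y, sp * x + cp * y
    azimuth = math.atan2(y, x)
    elevation = math.atan2(z, math.hypot(x, y))
    return azimuth, elevation


# Hypothetical 640x480 camera with a 500-px focal length, face slightly right of center.
target_pan, target_tilt = pixel_to_base_angles(400.0, 240.0, 500.0, 500.0, 320.0, 240.0,
                                               pan=0.0, tilt=0.0)
```

Because pan is applied about the base z-axis and tilt about the panned axis, the returned azimuth and elevation are absolute pan and tilt targets, so the pan-tilt error is simply target minus current angle.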

Control Algorithms

- Face Detection: Haar cascades or deep learning models for robust detection

- Kalman Filtering: Predictive tracking to handle temporary occlusions

- PID Control: Proportional-Integral-Derivative controllers for smooth tracking

- Coordinate Mapping: Image pixel coordinates to pan-tilt angles

- Velocity Control: Smooth velocity commands for base movement
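On a three-wheeled omnidirectional base, velocity control comes down to inverse kinematics: converting a desired body velocity into individual wheel speeds. The sketch below assumes wheels mounted tangentially at 0°, 120°, and 240° around the center (a common "kiwi drive" layout), with `base_radius` the center-to-wheel distance and `wheel_radius` the wheel radius. It also includes a simple slew-rate limiter as one possible way to keep velocity commands smooth. All names and dimensions are hypothetical.

```python
import math
from typing import List, Sequence

# Hypothetical layout: wheel mounting angles around the base center, from the base x-axis.
WHEEL_ANGLES = tuple(math.radians(a) for a in (0.0, 120.0, 240.0))


def omni_inverse_kinematics(vx: float, vy: float, omega: float,
                            base_radius: float, wheel_radius: float,
                            wheel_angles: Sequence[float] = WHEEL_ANGLES) -> List[float]:
    """Convert a body-frame twist into wheel angular speeds (rad/s).

    vx, vy are in m/s (x forward, y left); omega is in rad/s (counter-clockwise).
    Each wheel rolls tangentially, so its contact speed is the projection of the
    body velocity onto the tangent direction plus base_radius * omega.
    """
    return [(-math.sin(a) * vx + math.cos(a) * vy + base_radius * omega) / wheel_radius
            for a in wheel_angles]


def ramp(current: float, target: float, max_step: float) -> float:
    """Slew-rate limiter: move `current` toward `target` by at most `max_step` per call."""
    step = max(-max_step, min(max_step, target - current))
    return current + step


# Hypothetical control-loop iteration: ease the yaw-rate command toward its target,
# then convert it to wheel speeds (dimensions in metres are illustrative).
omega_cmd = ramp(current=0.0, target=1.5, max_step=0.1)
wheel_speeds = omni_inverse_kinematics(0.0, 0.0, omega_cmd, base_radius=0.12, wheel_radius=0.03)
```

Pure rotation drives all three wheels at the same speed, while pure translation produces wheel speeds that sum to zero; both properties are quick sanity checks when calibrating the real base.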

🏆 Key Achievements

- Real-time face tracking at 30 FPS

- Smooth and responsive pan-tilt movements

- Robust tracking under varying lighting conditions

- Successful integration of vision, control, and actuation

- Modular ROS2 architecture for easy extension

💡 Challenges Overcome

- Synchronizing multiple control loops (pan, tilt, base)

- Handling face detection failures and occlusions

- Calibrating camera-to-robot coordinate transformations

- Optimizing PID parameters for smooth tracking

- Managing computational resources for real-time processing
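One way to ride out detection failures and short occlusions is to run a constant-velocity Kalman filter on the face center and let it coast on predictions while the detector reports nothing. This sketch assumes one independent filter per image axis, a discrete white-noise-acceleration process model, and illustrative noise values (`q`, `r`, `p0_pos`, `p0_vel`) that are not tuned. The `AxisKalman` and `FaceTrack` names and the `max_missed` threshold are hypothetical.

```python
from typing import Optional, Tuple


class AxisKalman:
    """Constant-velocity Kalman filter for one image axis.

    State: position (px) and velocity (px/s). Process noise follows a discrete
    white-noise-acceleration model with variance q; r is the measurement variance.
    Default values are illustrative, not tuned.
    """

    def __init__(self, q: float = 4e6, r: float = 4.0,
                 p0_pos: float = 4.0, p0_vel: float = 1e6) -> None:
        self.q, self.r = q, r
        self.p0_pos, self.p0_vel = p0_pos, p0_vel
        self.reset()

    def reset(self) -> None:
        self.x, self.v = 0.0, 0.0
        self.p = [[self.p0_pos, 0.0], [0.0, self.p0_vel]]
        self.initialized = False

    def start(self, z: float) -> None:
        """Initialize from a first measurement, with unknown (zero-mean) velocity."""
        self.reset()
        self.x = z
        self.initialized = True

    def predict(self, dt: float) -> None:
        p, q = self.p, self.q
        self.x += self.v * dt
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        p00 = p[0][0] + dt * (p[0][1] + p[1][0]) + dt * dt * p[1][1]
        p01 = p[0][1] + dt * p[1][1]
        p10 = p[1][0] + dt * p[1][1]
        p11 = p[1][1]
        self.p = [[p00 + q * dt**4 / 4, p01 + q * dt**3 / 2],
                  [p10 + q * dt**3 / 2, p11 + q * dt**2]]

    def update(self, z: float) -> None:
        p = self.p
        s = p[0][0] + self.r                # innovation variance, H = [1, 0]
        k0, k1 = p[0][0] / s, p[1][0] / s   # Kalman gain
        innovation = z - self.x
        self.x += k0 * innovation
        self.v += k1 * innovation
        # P <- (I - K H) P
        self.p = [[(1 - k0) * p[0][0], (1 - k0) * p[0][1]],
                  [p[1][0] - k1 * p[0][0], p[1][1] - k1 * p[0][1]]]


class FaceTrack:
    """Tracks a face center (u, v), coasting on predictions through missed detections."""

    def __init__(self, max_missed: int = 10) -> None:
        self.kx, self.ky = AxisKalman(), AxisKalman()
        self.max_missed = max_missed
        self.missed = 0

    def step(self, detection: Optional[Tuple[float, float]],
             dt: float) -> Optional[Tuple[float, float]]:
        """Advance one frame; `detection` is None when no face was found.

        Returns the estimated center, or None when there is no active track.
        """
        if not self.kx.initialized:
            if detection is None:
                return None
            self.kx.start(detection[0])
            self.ky.start(detection[1])
            self.missed = 0
            return (self.kx.x, self.ky.x)
        self.kx.predict(dt)
        self.ky.predict(dt)
        if detection is None:
            self.missed += 1
            if self.missed > self.max_missed:
                # Stale track: drop it so the next detection re-initializes the filters.
                self.kx.reset()
                self.ky.reset()
                return None
        else:
            self.missed = 0
            self.kx.update(detection[0])
            self.ky.update(detection[1])
        return (self.kx.x, self.ky.x)


# Hypothetical usage: a face moving right at 300 px/s, seen at 30 FPS, then briefly occluded.
track = FaceTrack(max_missed=5)
dt = 1 / 30
for k in range(20):
    estimate = track.step((100.0 + 10.0 * k, 240.0), dt)
for _ in range(3):
    estimate = track.step(None, dt)  # no detection: the filter coasts forward
```

Dropping the track after a bounded number of misses keeps the robot from chasing a stale prediction indefinitely; that is also a natural point to reset the pan-tilt PID state.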

📚 Key Learnings

- ROS2 Development: Building modular robotic systems with ROS2

- Computer Vision: Real-time face detection and tracking techniques

- Control Systems: PID control implementation for robotic applications

- Robotic Integration: Combining perception, planning, and actuation

- Coordinate Systems: Understanding and managing multiple reference frames

- Real-time Systems: Optimizing for low-latency performance

🚀 Future Enhancements

- Multi-face tracking and person re-identification

- Deep learning-based face recognition for personalized interaction

- Autonomous navigation to follow a person

- Gesture recognition for human-robot interaction

- Integration with SLAM for mapping and navigation

- Voice commands for hands-free control

- Mobile app for remote monitoring and control